We’re excited to share our latest publication, “Unlocking a Million Times More Data for AI”, now featured on the Institute for Progress (IFP) blog as part of their Launch Sequence collection. The collection includes ambitious ideas designed to accelerate AI development for science and security applications.

The Problem: The Data Paradox

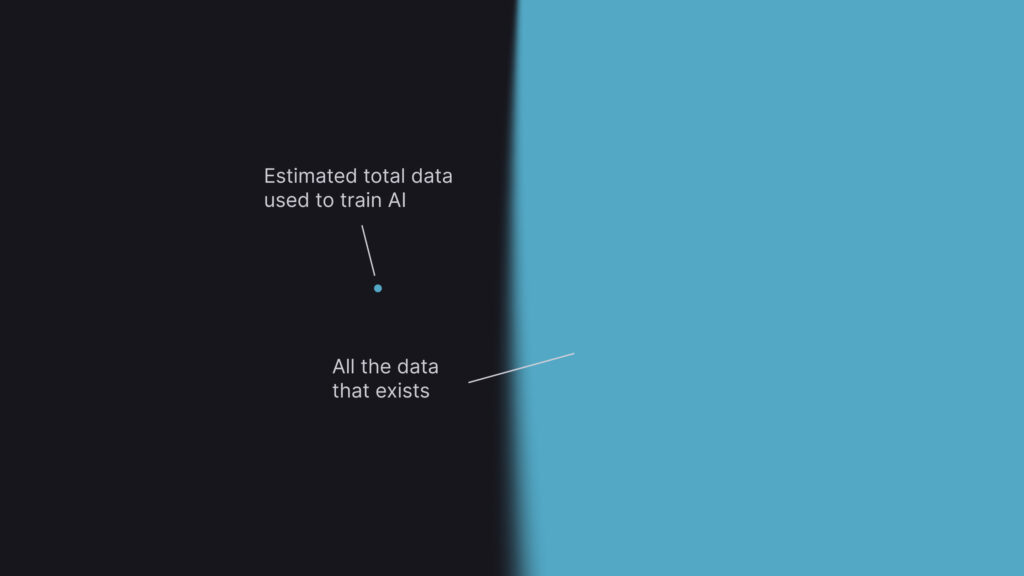

While AI leaders warn we’ve hit “peak data,” our analysis reveals a striking reality. Today’s AI models are trained on datasets of hundreds of terabytes, small enough to fit on hard drives on your kitchen table. Yet the world has digitized approximately 180–200 zettabytes of data, over a million times more. The challenge isn’t scarcity; it’s access.
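A quick back-of-the-envelope check of that ratio, assuming for illustration a 500 TB training set (a placeholder for “hundreds of terabytes,” not a figure from our analysis), the low end of the 180 ZB estimate, and decimal units (1 TB = 10^12 bytes, 1 ZB = 10^21 bytes):

$$
\frac{180\ \text{ZB}}{500\ \text{TB}} = \frac{1.8 \times 10^{23}\ \text{bytes}}{5 \times 10^{14}\ \text{bytes}} = 3.6 \times 10^{8}
$$

Even a 900 TB training set gives a ratio of 2 × 10^8, so “over a million times” (10^6) is, if anything, conservative.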

The Big Idea: Attribution-Based Control

Current AI systems are like giant blenders—once you contribute your data, you lose all control over how it’s used. This fundamental design flaw explains why hospitals, banks, and research institutions refuse to share valuable datasets that could advance AI capabilities. They know that sharing means permanently relinquishing control, creating competitors, and forfeiting any future value from their data.

Attribution-Based Control (ABC) transforms this dynamic by ensuring:

- Data owners control which AI predictions their data supports

- AI users control which data sources contribute to their received predictions

This creates a sustainable data economy where contributors maintain ownership while generating ongoing revenue, much as musicians earn royalties.
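To make the two controls above concrete, here is a toy Python sketch. It is not OpenMined’s implementation, and everything in it is hypothetical: the `DataSource` class, the `abc_predict` function, the assumption of one predictor per data source, and the simple averaging. It shows only a two-sided filter (the owner permits this user, and the user requested this source) plus a ledger recording which sources contributed to each prediction, the kind of record a royalty scheme could build on.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass
class DataSource:
    """Hypothetical data owner: a per-source predictor plus the owner's use policy."""
    name: str
    predict: Callable[[float], float]  # assumption: each source yields its own prediction
    allowed_users: set[str]            # users the owner permits to benefit from this data


def abc_predict(sources, user, requested, query, ledger):
    """Toy attribution-based prediction (illustrative only).

    A source contributes only if the user requested it AND its owner permits this user.
    Each contributing source is credited in `ledger` so it could later be paid.
    """
    used = [s for s in sources if s.name in requested and user in s.allowed_users]
    if not used:
        raise ValueError("no source is both requested by and permitted for this user")
    for s in used:
        ledger[s.name] += 1  # attribution record, e.g. for royalties
    # Plain average of per-source predictions: a stand-in for a real attribution-aware model.
    prediction = sum(s.predict(query) for s in used) / len(used)
    return prediction, [s.name for s in used]


# Usage: lab_c does not permit "researcher", so only two sources contribute.
ledger = Counter()
sources = [
    DataSource("hospital_a", lambda x: 2 * x, {"researcher"}),
    DataSource("bank_b", lambda x: x + 1, {"researcher", "advertiser"}),
    DataSource("lab_c", lambda x: 0.0, {"advertiser"}),
]
pred, used = abc_predict(sources, "researcher", {"hospital_a", "bank_b", "lab_c"}, 3.0, ledger)
print(pred, used)  # 5.0 ['hospital_a', 'bank_b']
```

Keeping predictions separable by source is what makes both controls enforceable in this sketch; in a model trained on pooled data, there is no per-source component to include or exclude.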

The Path Forward: Why Governments Must Lead

The private sector has developed remarkable AI capabilities, but market forces actively hinder the cooperation necessary for AI’s next breakthrough. When companies share data, they lose control, as every recipient can resell or reuse that same data, potentially creating new competitors. This fundamental economic problem explains why tech giants hoard data rather than sell it. Building data moats for competitive advantage is a rational business strategy, but it’s the opposite of what AI advancement requires.

This isn’t a new problem. In the 1960s, computing giants had no business incentive to connect their isolated computing systems. It was the United States government’s vision, realized through ARPANET, that helped overcome these market failures and create the internet. Today’s data crisis demands similar government intervention precisely because market incentives push against the cooperation AI needs to advance. Only government has the convening power to align competing stakeholders, the resources to de-risk unproven infrastructure, and the mandate to prioritize collective progress over individual profit. Without this leadership, AI will remain constrained by the data each company can acquire on its own: a tiny fraction of the world’s digital knowledge.

Why This Matters for OpenMined

For nearly a decade, OpenMined has been pioneering the privacy-preserving technologies that make ABC possible, and we’re actively building the infrastructure that enables data to be useful without being exposed. This proposal represents the convergence of years of technical development with the policy frameworks needed to create real-world impact. It’s not just about building better technology—it’s about creating the foundation for a new data economy where every organization can participate in AI progress while maintaining sovereignty over its most valuable asset: its data.

Read the full piece on the Institute for Progress blog to learn more about how we can move from data scarcity to data abundance while preserving privacy and control.